Researchers Find Retraining Only Small Parts of AI Models Cuts Costs and Prevents Forgetting

In today’s fast-moving world of artificial intelligence, keeping AI models current can be both tricky and expensive. Updating a model has often meant retraining it entirely, a process that consumes massive amounts of computing power, time, and energy. Recent research suggests that retraining only selected portions of a model could be a smarter, more efficient alternative. This approach can save money, reduce energy use, and prevent a problem known as “catastrophic forgetting.”

What is Catastrophic Forgetting?

Catastrophic forgetting happens when an AI model learns new tasks or data but ends up losing previously acquired knowledge. This issue is especially noticeable in:

- Large language models

- Image recognition systems

- Recommendation engines

For example, a model trained to spot social media trends might forget earlier patterns after being updated with new data if retraining isn’t done carefully.

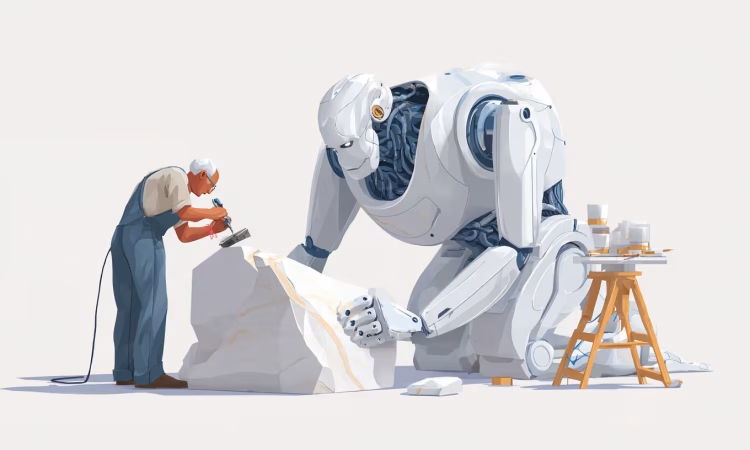

The Breakthrough: Selective Retraining

Researchers are now exploring “selective retraining,” where only small, crucial parts of a neural network are updated instead of the entire system. Neural networks, the backbone of most AI, contain layers and nodes with millions or even billions of parameters. Updating every parameter is resource-intensive, but targeting only the parameters most relevant to the new data is far more efficient.
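To make "updating only part of a network" concrete, here is a minimal sketch in plain Python. It assumes a model stored as named parameter groups (the group names `encoder`, `middle`, and `head` are hypothetical, not taken from the research described) and a gradient already computed for each group. Only the groups listed as trainable receive a gradient-descent step; the rest are copied unchanged. The function also reports what fraction of the parameters were actually touched.

```python
from typing import Dict, List, Set, Tuple

Params = Dict[str, List[float]]


def selective_update(
    params: Params,
    grads: Params,
    trainable: Set[str],
    lr: float = 0.1,
) -> Tuple[Params, float]:
    """Apply one gradient-descent step to the trainable groups only.

    Returns the updated parameters and the fraction of all parameters
    that this step actually changed.
    """
    updated: Params = {}
    touched = 0
    total = 0
    for name, values in params.items():
        total += len(values)
        if name in trainable:
            # Trainable group: standard update w <- w - lr * grad.
            updated[name] = [w - lr * g for w, g in zip(values, grads[name])]
            touched += len(values)
        else:
            # Frozen group: copied as-is, so what it encodes is preserved.
            updated[name] = list(values)
    return updated, touched / total


# Hypothetical toy model: 6 + 3 + 1 = 10 parameters in three groups.
params = {
    "encoder": [0.5, -0.2, 0.1, 0.3, 0.0, 0.7],
    "middle": [0.4, 0.4, -0.1],
    "head": [1.0],
}
grads = {name: [1.0] * len(vals) for name, vals in params.items()}

new_params, fraction = selective_update(params, grads, trainable={"head"})
print(new_params["head"], fraction)  # [0.9] 0.1
```

In a real framework the same effect is usually achieved by marking frozen parameters as not requiring gradients. In PyTorch, for example, you would call `requires_grad_(False)` on them, so autograd does not compute gradients for those parameters at all.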

Dr. Lena Thompson, a lead researcher, explains:

“AI models aren’t monolithic. Different layers and nodes handle different types of knowledge. By updating only the segments that interact with new data, we can preserve the rest of the model’s knowledge while still keeping it adaptable.”

This strategy reduces computational costs and keeps previously learned skills intact, avoiding the erasure that can occur during full-scale retraining.
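The following toy experiment illustrates both forgetting and how freezing can avoid it. It is a sketch under invented assumptions, not the researchers' setup. A tiny linear model has a hypothetical `shared` slope, standing in for general knowledge, plus task-specific slope and offset parameters. It is first fit to an old task A and then updated on new task B data in one of two ways: fully, with every parameter free to move, or selectively, with only task B's own parameters trainable. The model, data, learning rate, and step count are all made up for illustration.

```python
from typing import Dict, List, Set, Tuple

Params = Dict[str, float]
Sample = Tuple[float, str, float]  # (input x, task id "A" or "B", target y)


def predict(p: Params, x: float, task: str) -> float:
    # Hypothetical model: shared slope + task-specific slope, plus task offset.
    return (p["shared"] + p["slope_" + task]) * x + p["offset_" + task]


def mse(p: Params, data: List[Sample]) -> float:
    return sum((predict(p, x, t) - y) ** 2 for x, t, y in data) / len(data)


def train(p: Params, data: List[Sample], trainable: Set[str],
          lr: float = 0.05, steps: int = 2000) -> Params:
    """Full-batch gradient descent on mean squared error; frozen params never move."""
    p = dict(p)
    n = len(data)
    for _ in range(steps):
        grads = {k: 0.0 for k in p}
        for x, t, y in data:
            err = predict(p, x, t) - y
            grads["shared"] += 2 * err * x / n
            grads["slope_" + t] += 2 * err * x / n
            grads["offset_" + t] += 2 * err / n
        for k in trainable:
            p[k] -= lr * grads[k]
    return p


# Hypothetical toy data, not results from the research described.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
task_a = [(x, "A", 2 * x + 1) for x in xs]  # old knowledge: y = 2x + 1
task_b = [(x, "B", 6 * x - 1) for x in xs]  # new data:      y = 6x - 1

start = {"shared": 0.0, "slope_A": 0.0, "offset_A": 0.0,
         "slope_B": 0.0, "offset_B": 0.0}
base = train(start, task_a, trainable={"shared", "offset_A"})

full = train(base, task_b, trainable=set(base))  # every parameter may move
selective = train(base, task_b, trainable={"slope_B", "offset_B"})  # shared frozen

for name, p in [("full", full), ("selective", selective)]:
    print(f"{name}: A={mse(p, task_a):.3f} B={mse(p, task_b):.3f}")
# full: A=2.000 B=0.000
# selective: A=0.000 B=0.000
```

Both updates fit task B. Full retraining, however, drags the shared slope from 2 to 4 and task A's error jumps, while the selective update leaves task A untouched. Real networks are far less cleanly separable than this toy, which is why identifying the right segments remains a challenge.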

Economic and Environmental Benefits

Retraining entire AI models from scratch can cost millions of dollars and consume huge amounts of energy—sometimes equivalent to the annual carbon footprint of several households. As AI adoption grows across industries like healthcare, finance, and e-commerce, these costs can skyrocket.

Selective retraining can:

- Cut operational costs

- Reduce energy consumption

- Preserve performance on previously learned tasks

Dr. Thompson adds, “It’s a win-win. You save money, reduce energy use, and keep your AI systems performing at their best. This approach could make AI development more sustainable at scale.”

Real-World Applications

The potential of selective retraining spans multiple industries:

- Healthcare: Diagnostic AI systems can integrate new clinical data while retaining established medical knowledge, helping maintain accuracy and reliability.

- Natural Language Processing: Language models can adopt new slang, terms, and trends without forgetting older patterns—vital for translation, sentiment analysis, and AI-driven content creation.

- Autonomous Vehicles: Updating only sections of a driving AI related to new traffic patterns or mapped areas can enhance safety and efficiency without retraining the entire system.

Challenges and Limitations

While promising, selective retraining isn’t a one-size-fits-all solution:

- Identifying key segments: Understanding which parts of a network to update requires deep analysis, as some nodes influence multiple functions.

- Model compatibility: Smaller or tightly integrated models may not have clear sections to update selectively.

- Performance risks: Careful testing is needed to ensure updates don’t compromise overall performance.

Despite these challenges, the method adds flexibility to AI maintenance. Where updates have traditionally been all-or-nothing, selective retraining offers a more granular approach that better fits real-world needs.

Looking Ahead

Researchers are now exploring automated methods to determine which parts of a network should be retrained. Machine learning itself could guide selective updates, making the process even faster and more efficient.

This could open the door to incremental, continuous learning, allowing AI models to be refreshed in manageable steps instead of waiting for large, costly retraining cycles. Experts believe this could fundamentally change AI maintenance.
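One simple way to let the data guide which parts of a network to update works like this: compute gradients on a batch of new data, then train only the parameter groups whose gradients are largest, since those are the parts the new data pulls on hardest. This heuristic is offered here as an illustrative assumption, not the method the researchers are developing. The group names and gradient values below are hypothetical.

```python
import math
from typing import Dict, List


def rms_grad(values: List[float]) -> float:
    # Root-mean-square gradient magnitude of one parameter group.
    return math.sqrt(sum(v * v for v in values) / len(values))


def select_groups(grads: Dict[str, List[float]], k: int) -> List[str]:
    """Return the k groups with the largest root-mean-square gradient.

    Averaging per parameter keeps large groups from being chosen simply
    because they contain more parameters.
    """
    scores = {name: rms_grad(values) for name, values in grads.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical gradients measured on a batch of new data.
grads = {
    "embedding": [0.01, -0.02, 0.00, 0.01],
    "block_1": [0.05, 0.04, -0.03, 0.02],
    "block_2": [0.40, -0.35, 0.30, 0.25],
    "head": [0.90, -0.80],
}
print(select_groups(grads, k=2))  # ['head', 'block_2']
```

Gradient size is only a rough proxy. As noted under the challenges above, some parts of a network serve several functions, so a group with a large gradient on new data may also be important for what the model already knows.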

Rachel Meyer, an AI strategist, notes:

“This approach could redefine how organizations think about AI maintenance. It’s practical, cost-effective, and aligns with sustainability and knowledge preservation goals.”

Conclusion

As AI becomes part of everyday life, keeping models updated efficiently is critical. Selective retraining—updating only small, targeted parts of AI models—offers a way to reduce costs, save energy, and prevent knowledge loss.

While more research is needed, early results are encouraging. This method could reshape how businesses and researchers maintain AI, ensuring systems stay cutting-edge without unnecessary expense or lost knowledge.

In a field known for rapid innovation and high costs, selective retraining offers an elegant solution—allowing AI to evolve smarter, greener, and more economically than ever before.